Today, we are announcing updates to a number of PyTorch libraries alongside the PyTorch 1.8 release. The updates include new releases of the domain libraries TorchVision, TorchText and TorchAudio, as well as a new version of TorchCSPRNG. These releases add a number of new features and improvements and, together with PyTorch 1.8, give the PyTorch community a broad set of updates to build on.

Some highlights include:

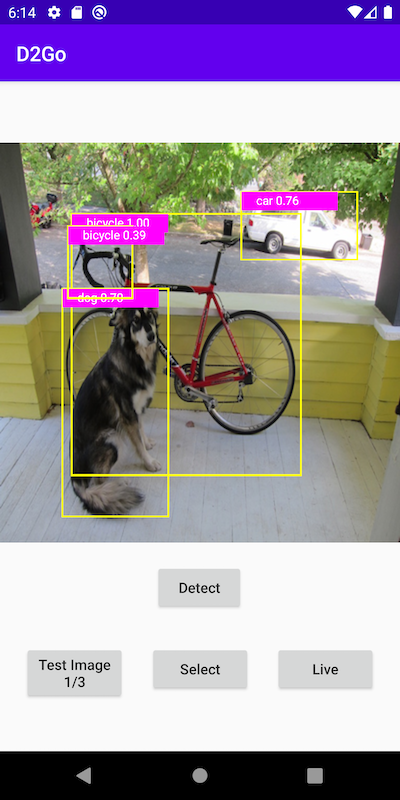

- TorchVision - Added support for PyTorch Mobile including Detectron2Go (D2Go), auto-augmentation of data during training, on-the-fly type conversion, and AMP autocasting.

- TorchAudio - Major improvements to I/O, including defaulting to the sox_io backend and file-like object support. Added the Kaldi Pitch feature and support for a CMake-based build, allowing TorchAudio to better support non-Python environments.

- TorchText - Updated the dataset loading API to be compatible with standard PyTorch data loading utilities.

- TorchCSPRNG - Support for cryptographically secure pseudorandom number generators for PyTorch is now stable with new APIs for AES128 ECB/CTR and CUDA support on Windows.

Please note that, starting in PyTorch 1.6, features are classified as Stable, Beta, and Prototype. Prototype features are not included in the binary distribution; they are available by building from source, using nightlies, or via a compiler flag. You can see the detailed announcement here.

TorchVision 0.9.0

[Stable] TorchVision Mobile: Operators, Android Binaries, and Tutorial

We are excited to announce the first on-device support and binaries for a PyTorch domain library. We have seen significant appetite in both research and industry for on-device vision support to allow low-latency, privacy-friendly, and resource-efficient mobile vision experiences. You can follow this new tutorial to build your own Android object detection app using TorchVision operators, D2Go, or your own custom operators and model.

[Stable] New Mobile models for Classification, Object Detection and Semantic Segmentation

We have added support for the MobileNetV3 architecture and provided pre-trained weights for Classification, Object Detection and Segmentation. It is easy to get up and running with these models: just import and load them as you would any torchvision model:

import torch

import torchvision

# Classification

x = torch.rand(1, 3, 224, 224)

m_classifier = torchvision.models.mobilenet_v3_large(pretrained=True)

m_classifier.eval()

predictions = m_classifier(x)

# Quantized Classification

x = torch.rand(1, 3, 224, 224)

m_classifier = torchvision.models.quantization.mobilenet_v3_large(pretrained=True)

m_classifier.eval()

predictions = m_classifier(x)

# Object Detection: Highly Accurate High Resolution Mobile Model

x = [torch.rand(3, 300, 400), torch.rand(3, 500, 400)]

m_detector = torchvision.models.detection.fasterrcnn_mobilenet_v3_large_fpn(pretrained=True)

m_detector.eval()

predictions = m_detector(x)

# Semantic Segmentation: Highly Accurate Mobile Model

x = torch.rand(1, 3, 520, 520)

m_segmenter = torchvision.models.segmentation.deeplabv3_mobilenet_v3_large(pretrained=True)

m_segmenter.eval()

predictions = m_segmenter(x)

These models are highly competitive with TorchVision’s existing models on resource efficiency, speed, and accuracy. See our release notes for detailed performance metrics.

[Stable] AutoAugment

AutoAugment is a common Data Augmentation technique that can increase the accuracy of Scene Classification models. Though the data augmentation policies are directly linked to the dataset they were trained on, empirical studies show that ImageNet policies provide significant improvements when applied to other datasets. We’ve implemented 3 policies learned on the following datasets: ImageNet, CIFAR10 and SVHN. These can be used standalone or mixed-and-matched with existing transforms:

from torchvision import transforms

t = transforms.AutoAugment()

transformed = t(image)

transform = transforms.Compose([

    transforms.Resize(256),

    transforms.AutoAugment(),

    transforms.ToTensor()])

Other New Features for TorchVision

- [Stable] All read and decode methods in the io.image package now support:

  - Palette, Grayscale Alpha and RGB Alpha image types during PNG decoding

  - On-the-fly conversion of an image from one type to another during read

- [Stable] WiderFace dataset

- [Stable] Improved FasterRCNN speed and accuracy by introducing a score threshold on RPN

- [Stable] Modulation input for DeformConv2D

- [Stable] Option to write audio to a video file

- [Stable] Utility to draw bounding boxes

- [Beta] Autocast support in all Operators

Find the full TorchVision release notes here.

TorchAudio 0.8.0

I/O Improvements

We have continued our work from the previous release to improve TorchAudio’s I/O support, including:

- [Stable] Changing the default backend to “sox_io” (for Linux/macOS), and updating the “soundfile” backend’s interface to align with that of “sox_io”. The legacy backend and interface are still accessible, though their use is strongly discouraged.

- [Stable] File-like object support in the “sox_io” backend, the “soundfile” backend and sox_effects.

- [Stable] New options to change the format, encoding, and bits_per_sample when saving.

- [Stable] Added GSM, HTK, AMB, AMR-NB and AMR-WB format support to the “sox_io” backend.

- [Beta] A new functional.apply_codec function, which can degrade audio data by applying audio codecs supported by the “sox_io” backend in an in-memory fashion.

Here are some examples of features landed in this release:

import io

import boto3

import requests

import torchaudio

# URL, S3_BUCKET and S3_KEY are placeholders for your own resources

# Load audio over HTTP

with requests.get(URL, stream=True) as response:

    waveform, sample_rate = torchaudio.load(response.raw)

# Save to a bytes buffer as 16-bit signed integer PCM

buffer_ = io.BytesIO()

torchaudio.save(

    buffer_, waveform, sample_rate,

    format="wav", encoding="PCM_S", bits_per_sample=16)

# Apply effects while loading audio from S3

client = boto3.client('s3')

response = client.get_object(Bucket=S3_BUCKET, Key=S3_KEY)

waveform, sample_rate = torchaudio.sox_effects.apply_effect_file(

    response['Body'],

    [["lowpass", "-1", "300"], ["rate", "8000"]])

# Apply GSM codec to Tensor

encoded = torchaudio.functional.apply_codec(

    waveform, sample_rate, format="gsm")

Check out the revamped audio preprocessing tutorial, Audio Manipulation with TorchAudio.

[Stable] Switch to CMake-based build

The previous version of TorchAudio used CMake only to build third-party dependencies. Starting in 0.8.0, TorchAudio also uses CMake to build its C++ extension. This opens the door to integrating TorchAudio in non-Python environments (such as C++ applications and mobile). We will continue working on adding example applications and mobile integrations.

[Beta] Improved and New Audio Transforms

We have added two widely requested operators in this release: the SpectralCentroid transform and the Kaldi Pitch feature extraction (detailed in “A pitch extraction algorithm tuned for automatic speech recognition”). We’ve also exposed a normalization method to Mel transforms, and additional STFT arguments to Spectrogram. We would like to ask our community to continue to raise feature requests for core audio processing features like these!

Community Contributions

We had more contributions from the open source community in this release than ever before, including several completely new features. We would like to extend our sincere thanks to the community. Please check out the newly added CONTRIBUTING.md for ways to contribute code, and remember that reporting bugs and requesting features are just as valuable. We will continue posting well-scoped work items as issues labeled “help-wanted” and “contributions-welcome” for anyone who would like to contribute code, and are happy to coach new contributors through the contribution process.

Find the full TorchAudio release notes here.

TorchText 0.9.0

[Beta] Dataset API Updates

In this release, we are updating TorchText’s dataset API to be compatible with PyTorch data utilities, such as DataLoader, and are deprecating TorchText’s custom data abstractions such as Field. The updated datasets are simple string-by-string iterators over the data. For guidance about migrating from the legacy abstractions to use modern PyTorch data utilities, please refer to our migration guide.
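To make the idea concrete, here is a minimal sketch of feeding a string-by-string iterator into a standard `DataLoader`. It does not use TorchText itself: `ToyTextDataset`, `RAW_SAMPLES`, the whitespace tokenizer and the hand-built vocabulary are all hypothetical stand-ins, chosen so the example runs without downloading a dataset.

```python
import torch
from torch.nn.utils.rnn import pad_sequence
from torch.utils.data import DataLoader, IterableDataset

# Hypothetical toy data standing in for a real dataset such as AG_NEWS.
RAW_SAMPLES = [
    (1, "stocks rally as markets open"),
    (2, "team wins the final match"),
    (1, "markets close lower"),
    (2, "coach praises the team"),
]


class ToyTextDataset(IterableDataset):
    """Yields raw (label, text) pairs one at a time."""

    def __iter__(self):
        return iter(RAW_SAMPLES)


# Build a small vocabulary from the raw strings; index 0 is reserved for padding.
vocab = {"<pad>": 0}
for _, text in RAW_SAMPLES:
    for token in text.split():
        vocab.setdefault(token, len(vocab))


def collate_batch(batch):
    """Turn a list of (label, text) pairs into a label tensor and padded token ids."""
    labels = torch.tensor([label for label, _ in batch])
    token_ids = [torch.tensor([vocab[t] for t in text.split()]) for _, text in batch]
    padded = pad_sequence(token_ids, batch_first=True, padding_value=vocab["<pad>"])
    return labels, padded


loader = DataLoader(ToyTextDataset(), batch_size=2, collate_fn=collate_batch)
batches = list(loader)
```

Because the dataset only yields raw strings, tokenization, numericalization and padding all live in `collate_batch`, which is the role the deprecated `Field` abstraction used to play.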

The text datasets listed below have been updated as part of this work. For examples of how to use these datasets, please refer to our end-to-end text classification tutorial.

- Language modeling: WikiText2, WikiText103, PennTreebank, EnWik9

- Text classification: AG_NEWS, SogouNews, DBpedia, YelpReviewPolarity, YelpReviewFull, YahooAnswers, AmazonReviewPolarity, AmazonReviewFull, IMDB

- Sequence tagging: UDPOS, CoNLL2000Chunking

- Translation: IWSLT2016, IWSLT2017

- Question answering: SQuAD1, SQuAD2

Find the full TorchText release notes here.

[Stable] TorchCSPRNG 0.2.0

In August 2020, we released TorchCSPRNG, a PyTorch C++/CUDA extension that provides cryptographically secure pseudorandom number generators for PyTorch. Today, we are releasing version 0.2.0 and designating the library as stable. This release includes a new API for encryption and decryption with AES128 ECB/CTR, as well as CUDA 11 and Windows CUDA support.

Find the full TorchCSPRNG release notes here.

Thanks for reading, and if you are excited about these updates and want to participate in the future of PyTorch, we encourage you to join the discussion forums and open GitHub issues.

Cheers!

Team PyTorch